
Artificial Intelligence is reshaping the insurance industry at an unprecedented pace. From automating underwriting to enhancing fraud detection, AI is unlocking massive efficiencies and new capabilities. But as adoption accelerates, so does regulatory scrutiny. Around the world, governments are moving to ensure that AI-driven systems operate transparently, ethically, and without bias. For insurers, this creates both an opportunity and a mandate: to build technology infrastructures that are not only intelligent but also compliant by design. AI insurance regulation is now a critical driver of how that technology must be built.

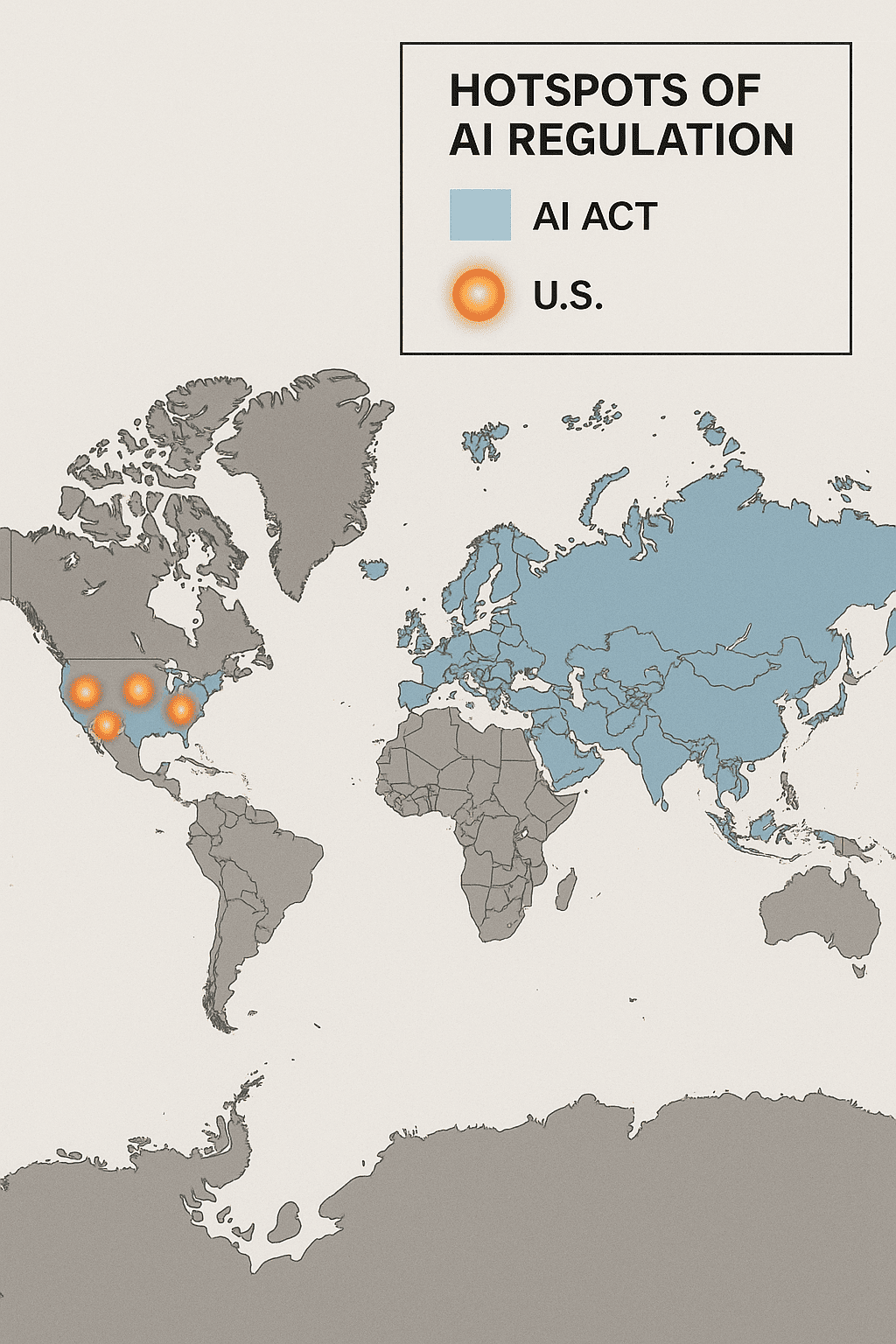

AI insurance regulation is no longer theoretical. In the European Union, the AI Act is already setting the benchmark for how high-risk applications—like those used in underwriting or risk profiling—must be governed. In the United States, regulators are watching closely, and early enforcement actions have already targeted discriminatory algorithmic practices in financial services. Insurers operating in or selling to regulated markets can’t afford to be reactive. The time to prepare is now.

Regulatory frameworks are also shaping the insurance technology trends of 2025 and beyond. AI insurance compliance now influences how systems are built, tested, and deployed at scale: automation alone is not enough, and systems must be compliance-ready out of the box.

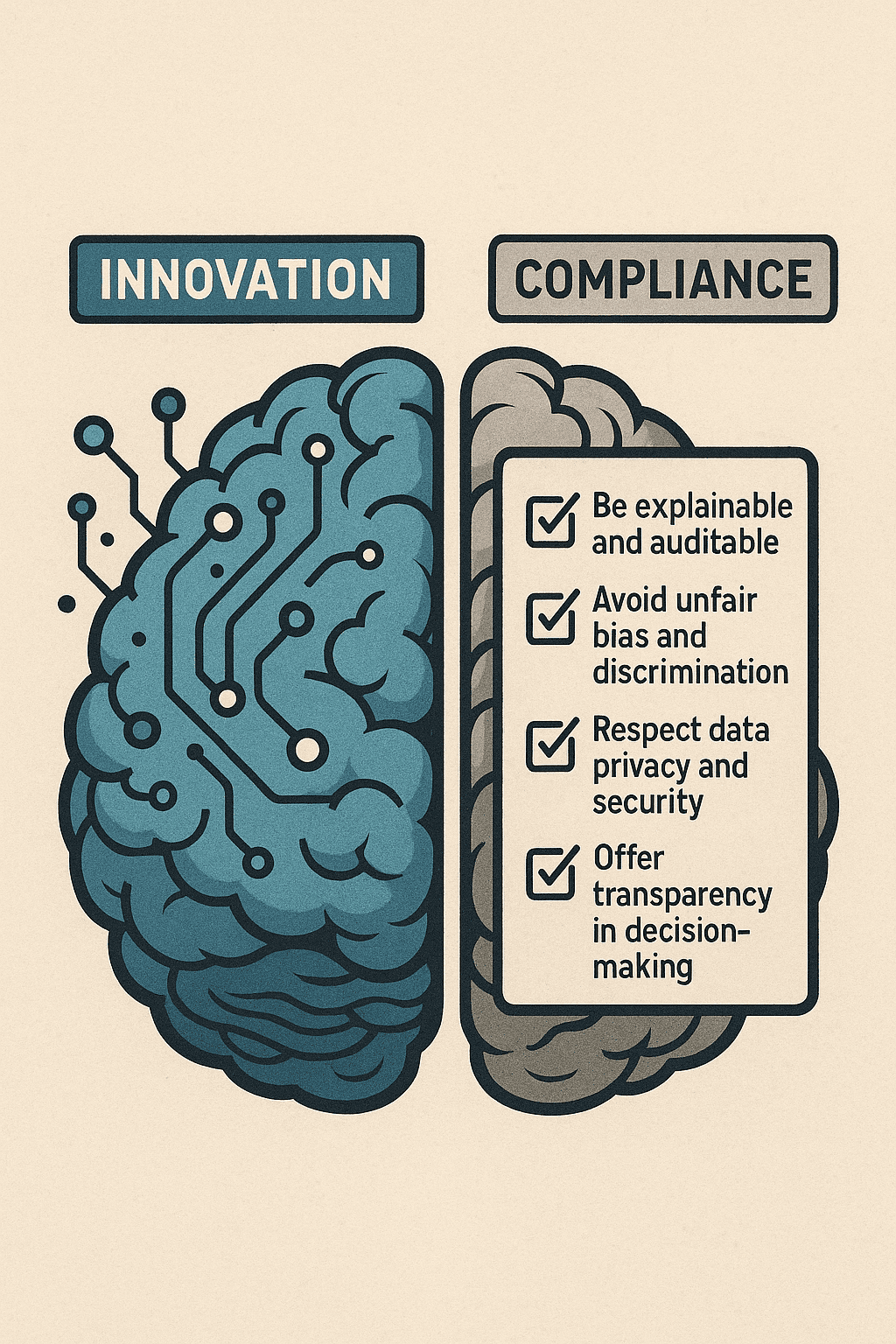

Building compliance-ready insurance technology starts with understanding the intent behind emerging AI regulatory frameworks. These frameworks are not designed to slow innovation but to ensure it happens responsibly. They typically require that AI systems operate transparently, ethically, and without bias.

Translating these principles into software architecture means rethinking how AI is developed, tested, and deployed. It’s not enough to plug in a model and hope for the best. Companies need AI governance in insurance that ensures responsible use at every stage of the lifecycle—from data ingestion to decision output.

A good starting point is to implement a robust model governance process. This includes documentation of training data sources, rationale for model choices, and regular audits to detect drift or bias. It also means making sure human oversight is built in, especially in decision points that affect policy eligibility or pricing. These are core challenges of AI in insurance underwriting that demand attention.
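As a minimal sketch of the "regular audits to detect drift" step, the Python below computes a Population Stability Index (PSI), a common way to compare the distribution of a feature or model score at training time with its distribution in production. The function names, the equal-width binning, and the 0.25 alert threshold (a widely used rule of thumb, not a regulatory requirement) are assumptions to adapt to your own monitoring setup.

```python
import math
from typing import Sequence

# Rule-of-thumb alert level (assumption): PSI above ~0.25 is often read as a
# significant shift. Tune it to your own portfolio and risk appetite.
PSI_ALERT_THRESHOLD = 0.25


def population_stability_index(
    expected: Sequence[float],
    actual: Sequence[float],
    bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """PSI between a baseline sample (e.g. training data) and a recent sample.

    Uses equal-width bins over the baseline's range; production values outside
    that range are clamped into the first or last bin. Both samples must be
    non-empty.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid division by zero for a constant feature

    def bin_shares(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor keeps log() defined when a bin is empty.
        return [max(c / len(values), eps) for c in counts]

    e, a = bin_shares(expected), bin_shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def drift_alert(expected: Sequence[float], actual: Sequence[float]) -> bool:
    """True when the shift is large enough to warrant a human review."""
    return population_stability_index(expected, actual) > PSI_ALERT_THRESHOLD


if __name__ == "__main__":
    baseline = [i / 100 for i in range(100)]           # hypothetical training scores
    shifted = [min(s + 0.3, 0.99) for s in baseline]  # hypothetical production scores
    print(population_stability_index(baseline, baseline))
    print(drift_alert(baseline, shifted))
```

In practice a check like this would run on a schedule for each input feature and for the model's output scores, with alerts routed to the people accountable for the model rather than triggering automatic retraining.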

In addition, organizations should begin investing in internal education around AI ethics and regulation. Cross-functional training helps product teams, engineers, and compliance leads share a common understanding of what responsible AI looks like in practice. This reduces misalignment, avoids costly rework, and fosters a culture where compliance is seen as a design principle rather than a blocker. Embracing smart automation can reinforce this by significantly improving cross-functional velocity.

Designing for explainable AI in insurance is a cornerstone of compliance. Insurers should avoid “black box” models when outcomes have financial or legal implications. Instead, they should prioritize models that can clearly articulate why a certain decision was made. Techniques such as SHAP (SHapley Additive exPlanations) values or LIME (Local Interpretable Model-agnostic Explanations) can help surface which features contributed most to a particular output. Explainability isn’t just a regulatory checkbox; it also builds trust with both regulators and policyholders.
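SHAP and LIME are usually applied through their own libraries, but the core idea is easy to see for a linear scoring model: assuming independent features, a feature's SHAP value is its weight times how far the applicant's value sits from the average, and the contributions add up to the gap between this applicant's score and the average score. The sketch below computes those contributions directly. The model class, feature names, and weights are hypothetical, and non-linear models would need the SHAP or LIME libraries instead.

```python
import math
from dataclasses import dataclass


@dataclass
class LinearRiskModel:
    """Hypothetical linear risk score: score = bias + sum(weight * feature)."""

    bias: float
    weights: dict[str, float]
    feature_means: dict[str, float]  # averages over the training (background) data

    def score(self, applicant: dict[str, float]) -> float:
        return self.bias + sum(w * applicant[f] for f, w in self.weights.items())

    def explain(self, applicant: dict[str, float]) -> dict[str, float]:
        """Per-feature contributions relative to the average applicant.

        For a linear model with independent features these equal the SHAP
        values: weight * (value - mean). Sorted by absolute impact.
        """
        contributions = {
            f: w * (applicant[f] - self.feature_means[f])
            for f, w in self.weights.items()
        }
        return dict(sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True))


if __name__ == "__main__":
    model = LinearRiskModel(
        bias=10.0,
        weights={"vehicle_age": 0.5, "prior_claims": 2.0, "annual_mileage_k": 0.1},
        feature_means={"vehicle_age": 6.0, "prior_claims": 0.5, "annual_mileage_k": 12.0},
    )
    applicant = {"vehicle_age": 10.0, "prior_claims": 2.0, "annual_mileage_k": 8.0}
    reasons = model.explain(applicant)
    # Additivity check: contributions explain the gap from the average score.
    gap = model.score(applicant) - model.score(model.feature_means)
    assert math.isclose(sum(reasons.values()), gap)
    for feature, impact in reasons.items():
        print(f"{feature}: {impact:+.2f}")
```

Here `prior_claims` is listed first because it is the largest driver of this applicant's above-average score, which is the kind of per-decision reason an underwriter or regulator can act on.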

Moreover, privacy laws such as the GDPR and CCPA already impose strict data-handling requirements, and these requirements are increasingly reflected in AI insurance regulation. Consent management, data minimization, and secure storage should therefore be built into your AI systems from the start. Data privacy in AI insurance is not optional; it’s foundational.
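A minimal sketch of data minimization combined with a consent check: before a record reaches a model, keep only the fields that the processing purpose needs, and refuse to proceed if no recorded consent covers that purpose. The purpose names, field lists, and classes are hypothetical, and gating purely on consent is a simplification, since the GDPR also recognizes other lawful bases for processing.

```python
from dataclasses import dataclass, field

# Hypothetical purpose-to-fields policy. In practice this mapping would be
# owned by your privacy/compliance team rather than hard-coded.
ALLOWED_FIELDS: dict[str, frozenset[str]] = {
    "underwriting": frozenset({"date_of_birth", "postcode", "vehicle_age", "prior_claims"}),
    "marketing": frozenset({"email"}),
}


class ConsentError(Exception):
    """Raised when no recorded consent covers the requested processing purpose."""


@dataclass
class ConsentRecord:
    customer_id: str
    purposes: set[str] = field(default_factory=set)


def minimize(record: dict, purpose: str, consent: ConsentRecord) -> dict:
    """Return only the fields the given purpose needs, after checking consent."""
    if purpose not in consent.purposes:
        raise ConsentError(f"customer {consent.customer_id} has not consented to {purpose!r}")
    allowed = ALLOWED_FIELDS.get(purpose, frozenset())  # unknown purpose: keep nothing
    return {k: v for k, v in record.items() if k in allowed}


if __name__ == "__main__":
    consent = ConsentRecord("C-001", purposes={"underwriting"})
    raw = {"name": "A. Customer", "email": "a@example.com", "postcode": "AB1 2CD",
           "vehicle_age": 7, "prior_claims": 1}
    print(minimize(raw, "underwriting", consent))  # name and email are dropped
    try:
        minimize(raw, "marketing", consent)
    except ConsentError as err:
        print("blocked:", err)
```

Defaulting an unknown purpose to an empty field set means a new use case has to be explicitly approved before any data flows to it.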

This regulatory pressure also affects vendor selection and third-party integrations. If your AI stack relies on external models or APIs, you’re still responsible for the outcomes, which makes transparency and accountability across your vendor ecosystem just as critical as your internal controls. This is one reason RegTech is playing a growing role in insurance companies, and why vendor governance is a key part of any compliance strategy.
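Because you remain responsible for what an external model returns, one practical control is to route every vendor call through a thin wrapper that records which vendor and model version produced each output. The sketch below assumes a generic callable standing in for the vendor's client (hypothetical here) and stores a hash of the input rather than a copy of the raw data.

```python
import hashlib
import json
import time
from dataclasses import dataclass
from typing import Any, Callable


@dataclass(frozen=True)
class VendorCallRecord:
    vendor: str
    model_version: str
    input_sha256: str  # a hash of the input rather than a copy of the raw data
    output: Any
    timestamp: float


class AuditedVendorModel:
    """Wraps a third-party scoring call so every successful request leaves a record.

    `call` stands in for whatever client function your vendor provides
    (hypothetical here); inputs must be JSON-serializable.
    """

    def __init__(self, vendor: str, model_version: str, call: Callable[[dict], Any]):
        self.vendor = vendor
        self.model_version = model_version
        self._call = call
        self.records: list[VendorCallRecord] = []

    def predict(self, features: dict) -> Any:
        payload = json.dumps(features, sort_keys=True).encode("utf-8")
        output = self._call(features)
        self.records.append(
            VendorCallRecord(
                vendor=self.vendor,
                model_version=self.model_version,
                input_sha256=hashlib.sha256(payload).hexdigest(),
                output=output,
                timestamp=time.time(),
            )
        )
        return output


if __name__ == "__main__":
    # A stand-in for an external fraud-scoring API.
    fake_vendor_api = lambda f: {"fraud_score": 0.12}
    model = AuditedVendorModel("ExampleVendor", "2025-01", fake_vendor_api)
    model.predict({"claim_amount": 1800, "days_since_policy_start": 14})
    print(model.records[0].vendor, model.records[0].input_sha256[:12])
```

As written, a call that raises is not recorded; a production version would likely log failures too, and would persist records somewhere more durable than an in-memory list.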

Of course, compliance doesn’t happen in a silo. It requires collaboration between product, legal, compliance, and engineering teams. Establishing shared accountability—and clear documentation at every stage—ensures that regulatory requirements are not just met, but anticipated. A comprehensive regulatory framework for AI in insurance helps align internal teams under a common operational and ethical standard.
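To make "clear documentation at every stage" concrete, the sketch below keeps a hypothetical model record that captures the governance items mentioned earlier (training data sources, the rationale for the model choice, whether human review is in place) and blocks deployment until each team named above has signed off. The field names, the four required roles, and the rule that human review is mandatory are assumptions, not a standard schema.

```python
from dataclasses import dataclass, field

# Roles taken from the teams named in the text; requiring all four is an assumption.
REQUIRED_SIGNOFFS = ("product", "legal", "compliance", "engineering")


@dataclass
class ModelRecord:
    name: str
    version: str
    training_data_sources: list[str]
    model_choice_rationale: str
    # Assumed policy: models affecting eligibility or pricing need human review.
    uses_human_review: bool
    signoffs: dict[str, str] = field(default_factory=dict)  # role -> approver

    def sign_off(self, role: str, approver: str) -> None:
        if role not in REQUIRED_SIGNOFFS:
            raise ValueError(f"unknown role: {role}")
        self.signoffs[role] = approver

    def missing_signoffs(self) -> list[str]:
        return [r for r in REQUIRED_SIGNOFFS if r not in self.signoffs]

    def ready_for_deployment(self) -> bool:
        return (
            bool(self.training_data_sources)
            and bool(self.model_choice_rationale.strip())
            and self.uses_human_review
            and not self.missing_signoffs()
        )


if __name__ == "__main__":
    record = ModelRecord(
        name="motor_pricing",
        version="1.4",
        training_data_sources=["policies_2019_2023", "claims_2019_2023"],
        model_choice_rationale="Interpretable linear model chosen for explainability.",
        uses_human_review=True,
    )
    record.sign_off("product", "P. Owner")
    record.sign_off("engineering", "E. Lead")
    print(record.missing_signoffs())
    print(record.ready_for_deployment())
```

Keeping this record versioned alongside the model code means every deployed version can be traced back to who approved it and on what basis.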

One example of execution at scale can be seen in how automated onboarding transforms compliance-heavy workflows, without compromising speed or user experience.

The good news? Organizations that embrace regulatory readiness for AI early often discover competitive advantages. Compliance-ready systems tend to be more robust, trustworthy, and resilient—qualities that resonate with regulators, partners, and customers alike. These systems also reduce the risk of bias in insurance AI, which can otherwise lead to reputational and legal exposure.
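One simple way to monitor for the bias mentioned above is to compare approval rates across groups. The sketch below computes each group's approval rate relative to the best-treated group and flags ratios under 0.8, a threshold borrowed from the US "four-fifths" guideline for employment selection; it is a screening heuristic, not a legal test for insurance. It assumes you hold group labels you are permitted to use for fairness testing, and the group names are hypothetical.

```python
from collections import defaultdict
from typing import Iterable

FOUR_FIFTHS = 0.8  # screening threshold (assumption; not an insurance-specific rule)


def approval_rates(decisions: Iterable[tuple[str, bool]]) -> dict[str, float]:
    """decisions: (group_label, approved) pairs from a batch of model outputs."""
    totals: dict[str, int] = defaultdict(int)
    approvals: dict[str, int] = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        approvals[group] += int(approved)
    return {g: approvals[g] / totals[g] for g in totals}


def impact_ratios(decisions: Iterable[tuple[str, bool]]) -> dict[str, float]:
    """Each group's approval rate divided by the highest group's rate."""
    rates = approval_rates(decisions)
    best = max(rates.values(), default=0.0)
    # If nobody was approved there is no disparity to measure.
    return {g: (r / best if best > 0 else 1.0) for g, r in rates.items()}


def flagged_groups(decisions: Iterable[tuple[str, bool]], threshold: float = FOUR_FIFTHS) -> list[str]:
    return sorted(g for g, ratio in impact_ratios(decisions).items() if ratio < threshold)


if __name__ == "__main__":
    batch = ([("group_a", True)] * 80 + [("group_a", False)] * 20
             + [("group_b", True)] * 50 + [("group_b", False)] * 50)
    print(impact_ratios(batch))
    print(flagged_groups(batch))  # ['group_b']
```

A flag here is a prompt for investigation and human review, not proof of unlawful discrimination; differences can have legitimate risk-based explanations that need to be documented.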

In addition to meeting compliance standards, companies that prioritize regulatory alignment often build systems that are easier to maintain, scale, and improve over time. Strong documentation, audit trails, and transparent data pipelines contribute to better governance across the board—not just in the AI layer.
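Audit trails are more useful to regulators when they are tamper-evident. The minimal sketch below chains each decision record to the previous one with a SHA-256 hash, so a later edit to any entry breaks verification. The event fields are hypothetical, and this only detects tampering: someone able to rewrite the whole log could recompute every hash, and dropping entries from the end is not caught, so in practice the latest hash would also be stored somewhere independent.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(prev_hash: str, event: dict) -> str:
    body = json.dumps({"prev": prev_hash, "event": event}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


@dataclass
class AuditLog:
    entries: list[dict] = field(default_factory=list)

    def append(self, event: dict) -> dict:
        # Round-trip through JSON: checks serializability and stores a private copy.
        event = json.loads(json.dumps(event, sort_keys=True))
        prev = self.entries[-1]["hash"] if self.entries else GENESIS_HASH
        entry = {"prev": prev, "event": event, "hash": _entry_hash(prev, event)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """False if any entry was edited, or entries were deleted or reordered mid-chain."""
        prev = GENESIS_HASH
        for entry in self.entries:
            if entry["prev"] != prev or entry["hash"] != _entry_hash(prev, entry["event"]):
                return False
            prev = entry["hash"]
        return True


if __name__ == "__main__":
    log = AuditLog()
    log.append({"policy_id": "P-1", "model_version": "1.4", "decision": "refer_to_underwriter"})
    log.append({"policy_id": "P-2", "model_version": "1.4", "decision": "approve"})
    print(log.verify())
    log.entries[0]["event"]["decision"] = "approve"  # simulate tampering
    print(log.verify())
```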

We’ve seen firsthand how industries that transform their operations through AI unlock long-term resilience by designing for compliance early.

As regulatory landscapes evolve, so too will the expectations placed on AI systems in insurance. What’s considered responsible or compliant today may become mandatory tomorrow. By designing with compliance in mind from the outset, insurers can future-proof their technology stack and lead the market—not chase it. Staying ahead of insurance technology innovations in 2025 will depend on how well organizations integrate these principles now.

AI insurance regulation is not just a constraint. It’s a strategic inflection point. Companies that understand and act on this now won’t just avoid penalties—they’ll build trust, gain operational clarity, and unlock the full potential of AI. The next era of insurance technology won’t just be smart. It will be compliant by design.

If you’re exploring how to make your insurance systems compliance-ready from day one, we’re happy to share ideas, lessons, or simply start the conversation. Let’s connect.